Tertiary Education Learning Outcomes, a Case Study: “You want us to think!”

Universidad de La Laguna, Spain

Andrés Rodríguez-Marrero

anrodmar@gmail.com

Secondary School teacher, Spain

ABSTRACT

Current perceptions of the poor output of university students in recent decades may be no different from those other professors held in previous centuries. Nonetheless, more constraining forms of assessment and the irruption of new technologies may make a difference. These factors fuel the controversy when they are blamed for hindering intellectual development, or when ICTs are seen as the hallmark of new generations of young people facing their outdated “dinosaur” teachers. The purpose of this paper is to provide a tentative case analysis of the situation in order to test what seems to be a widespread perception of the decline of tertiary education. Our data are drawn from answers that third-year students of an English Studies degree in the Humanities could not provide. In our approach we consider the difficulties students have in reaching the highest levels of taxonomies such as Bloom’s (1956) or Dreyfus and Dreyfus’ (1980) and their subsequent modifications. Collaterally, we address key competences and forms of assessment. The results confront us with a dichotomy: maintaining the present trajectory or, alternatively, encouraging everyone to think again and take action.

Keywords: Students’ production; ICTs; learning taxonomies; competences; education.

I. INTRODUCTION

The generation gap has often been used to justify the negative perception many educators have of their students, especially at higher educational levels. Opinions about young people’s lack of values, laziness or reduced intellectual capacities have recurred since ancient times. But new technologies have actually altered the scenario through their controversial use: some consider them the cause of all ills, while others see them as the distinctive skills of new generations, set against outdated teachers who need to be retired or, rather, removed from the educational field. Turning these opinions into facts is the first obstacle to providing solutions where they are needed.

The purpose of this paper is to make a tentative start on an analysis from the field of the Humanities and to show, with data, the difficulties students have in their learning process. Most taxonomies of skill acquisition and learning outcomes, from Bloom’s (1956), Dreyfus and Dreyfus’ (1980) and Miller’s (1990) to their subsequent emendations and additions, have mainly been applied to the hard sciences and their experimental applications, and are not usually extrapolated to other areas such as History or Linguistics. Possibly, it is assumed that the soft sciences/Humanities deal with “facts” that are learnt by heart and can now be found on the internet. Nevertheless, the exclusion of the Humanities from taxonomies was not intended in their initial proposals, nor was any limit placed on the levels that could be reached in these fields of knowledge. For our purposes, these taxonomies might help precisely to determine the level reached by learners in these areas.

Discourse analysis can also be of assistance when discussing the assessment questions that students have to face, given the possibility of misunderstanding, or complete lack of understanding, of questions that try to measure the higher levels of the taxonomies. Recently, articles such as Breeze and Dafouz’s (2017) have studied discourse functions and students’ answers in the final exams of a compulsory course in “Consumer Behaviour” within a Business Administration (BA) degree. In this case the course had been taught in both English and Spanish, which allowed for further comparison. It seems plausible that the use of English as the medium of instruction could be a determining factor in students’ success or failure, and that is something we will also consider in this article.

In the following sections we will consider the role that taxonomies, competences and subject matter play in higher education. Next, we will present the results obtained from students’ assessment and discuss them in the light of the second section, before concluding with possible proposals for improvement, if they are deemed necessary.

II. TAXONOMIES

Several taxonomies for measuring skill development and learning outcomes (Bloom, 1956; Dreyfus & Dreyfus, 1980; Miller, 1990) have been developed, reviewed, adapted and combined (Basu, 2020) with the aim of making them more specific. These taxonomies, together with competence-based assessment, form part not only of Primary and Secondary education but also of Tertiary education. Nevertheless, the fact that they appear in programmes and evaluation systems does not mean that they are fully accepted or completely integrated into higher education.

Taxonomies are used more frequently in the hard sciences and clinical studies, and some universities indicate on their websites the one they use most widely (Karolinska Institutet, University at Buffalo, Vanderbilt University). However, as with other “education frames”, there seems to be an underlying assumption that some disciplines lend themselves to these frames more than others and that the frames work better in them. In the conclusions to their article on the Community of Inquiry (CoI) framework, Arbaugh et al. (2010) state:

The CoI’s assumption of a constructivist approach to teaching and learning may not align with the cumulative, instructor oriented approaches particularly associated with hard, pure disciplines [...] the framework may be more appropriate for disciplines such as education, health care, and business (p. 43, our emphasis).

In our opinion, if a Community of Inquiry is defined as “a group of individuals who collaboratively engage in purposeful critical discourse and reflection to construct personal meaning and confirm mutual understanding” (CoI Framework), no discipline should be excluded. Within the Humanities, some areas of expertise are seen, from both external and internal points of view, as “cumulative, instructor oriented”. Many lecturers assume their work is precisely that, to lecture, while learners likewise adopt a passive role and merely receive processed information. Bloom’s original taxonomy (1956, p. 18), based on six categories (Knowledge, Comprehension, Application, Analysis, Synthesis and Evaluation), was not limited to specific areas of learning and used subjects such as history, literature and music as examples. However, although more than fifty years ago Bloom devoted several pages to explaining what “knowledge” could mean in his taxonomy, some in the Humanities still seem to understand it as the accumulation of information. Thus, when the verbs “know” and “comprehend” appear in taxonomies or competence-based assessment applied to the Humanities, they are wrongly interpreted as the storage of facts. As a consequence, some fields of learning (History, Philosophy, Literature…) are devalued, because storage is certainly better done by the web. Something quite different is the ability to search for the right content, select the appropriate information, comprehend, apply, analyse…

The revision of Bloom’s taxonomy (Anderson & Krathwohl, 2001) renamed the categories using verbs, with the intention of making it more dynamic and therefore more representative of the cognitive process: remember, understand, apply, analyse, evaluate and create. Here, the knowledge dimension would structure the cognitive process dimension (Krathwohl, 2002, p. 215). The pyramid appears to be basically the same, but the question is at which level of it our students should stop. Do we really see it as a dynamic progression, or, depending on the field of study or on the individual student, should we be satisfied with the results of cramming and regurgitating information? As Tabrizi and Rideout (2017) note:

The level of expertise is organized in terms of increasing complexity, such that higher levels of expertise involve more sophisticated measurement of student outcomes. For example, the low-level of ‘remembering’ can be measured through a simple multiple-choice test, but the higher-level of ‘evaluating’ would require longer written responses, presentations, or oral discussions in order to measure the outcomes (p. 3204)

Of course, this implies not only fostering active learning, as the authors indicate, but also providing adequate feedback. In subjects taught through EMI (English as a Medium of Instruction), this feedback should address the use of English, as it should any other means of expression involved in the student’s production. The results of a survey of a large sample of Spanish and Italian students by Doiz et al. (2020, pp. 76 and 82) revealed that 59.3% dissociate subject content from EMI and consider that the use of English should not form part of assessment, an opinion that seems to be shared by lecturers. Nonetheless, the authors believe that:

it is critical that decision-makers at the university establish and define language-learning objectives as part of the goals of EMI. Moreover, it is also their responsibility to provide the blueprint with the advice of experts on the field, and to allocate the means to allow the fulfilment of the objectives (Doiz et al. 2020, p. 82).

Dreyfus and Dreyfus’ (1980) taxonomy was devised primarily for flight instruction, but, again, the authors also used examples from language learning and chess. They identify five mental stages leading to skill acquisition: novice, competence, proficiency, expertise and mastery. For them, practice and experience are the only paths to mastery:

Rather than adopting the currently accepted Piagetian view that proficiency increases as one moves from the concrete to the abstract, we argue that skill in its minimal form is produced by following abstract formal rules, but that only experience with concrete cases can account for higher levels of performance (Dreyfus & Dreyfus, 1980, p. 5).

As in the case of Bloom’s, this taxonomy has been reconsidered by its authors (Dreyfus, S. E., 2014), combined with other taxonomies (Christie, 2012) or, in some way, used as the basis of new ones. Miller’s taxonomy (1990) relates the different levels of knowledge to mental stages and to the forms of assessment suitable for each; Biggs and Collis’ SOLO (Structure of the Observed Learning Outcome) taxonomy (1982) proposes a similar development, describing the possible performance of learners as their outcomes become more complex.

Further taxonomies have been developed in an attempt to adjust to outcomes, competences, standards and all the pedagogical terminology that tries to describe the main objective of any committed teacher: to ensure that students make the most of their learning.

III. CASE STUDY

For our objective, we considered questions set in the final exams of different calls (exam sittings) of the compulsory subject “History of the English Language”, taught in the third year of English Studies, a four-year degree in the Humanities at a public university. All the subjects in this degree are taught in English, with the exception of some first-year subjects. The data were obtained from online exercises during the pandemic (SARS-CoV-2, 2020-2021) and compared with the results and pass rates of previous years. Since the number of students varied from one call to another, percentages are given, bearing in mind that more than one hundred students are enrolled in the subject and more than ninety took the first call. In the online exams, students were explicitly allowed to consult their notes, and it was assumed that they also had access to the internet. The time allowed for the answers, short answers of between fifty and one hundred words, was limited to between ten and fifteen minutes to prevent cheating as far as possible. All this had been explained to the students before the exam dates, indicating that they would be assessed through questions concerning their understanding of the general theory and through linguistic changes applied to words other than those used for practice in class, though with the same type of exercise structure.

Another exercise considered was set during the course and consisted of the collaborative compilation of a glossary. This would constitute a resource for all students, since it would include terms related to the subject, whether or not they had been mentioned in class, requiring further reading to improve their knowledge. Each student was assigned a different term and had one week to complete the task. The definition had to be uploaded to a glossary activity in the virtual classroom so that it would be accessible to everyone enrolled. Precise instructions were provided, and a first example was given to show not only the format but also the type of source expected. Proper acknowledgement of sources was a specific requirement, and resources for producing references were also provided. At the end of each week, the teacher gave feedback on each entry, with comments on any amendments required, and the student had an extra week to edit it. Comments on the entries were also made in class.

Both the exam questions and the glossary task aimed to evaluate how far the knowledge acquired by the student had progressed, from simply remembering up to evaluating, that is, from level 1 to level 5 of Bloom’s revised taxonomy. Creating was not expected in these particular tasks but could be assessed in the subject by other means, such as textual commentaries.

For our analysis, we concentrated on the questions in which a high percentage of students failed to provide an adequate answer or any answer at all, that is, where the mark obtained for the answer was zero on a scale from zero to ten.

The three different final exams students could take had the same structure: questions aimed at demonstrating medium-high levels of Bloom’s taxonomy and others that could show understanding of the subject matter. With respect to the degree, these students could not be considered absolute novices; with respect to the subject, however, although they had received instruction in English literature, phonetics and grammar in previous years, the perspective of Historical Linguistics was new to them.

Table 1 shows examples of the questions posed and the Cognitive Process Dimension(s) of Bloom’s taxonomy that students were expected to reach to provide an adequate answer. In the Cognitive Process column we also include the most relevant descriptors of each process as they appear in Krathwohl’s table “Structure of the Cognitive Process Dimension of the Revised Taxonomy” (2002, p. 215).

Table 1. Exam questions and cognitive processes involved.

| Exam question | Cognitive Process |
|---|---|
| 1. Considering the adjectives and determinant used in the second case, what do these Old English examples below illustrate? Justify your answer. O.E. Eald blind biscop (‘an old blind bishop’) // Se ealda blinda biscop (‘the old blind bishop’). In both examples “bishop” is in Nom. sg. and it is a masc. noun. | Apply (Implementing); Analyse (Differentiating, Organizing, Attributing); Evaluate |
| 2. Describe the graphic and phonetic evolution of the following word from its O.E. period till Mod. English. O.E. nacod (adj.) > Mod. E. naked (adj.) | Understand (Comparing, Explaining); Apply (Implementing) |
| 3. Explain briefly two factors you consider contributed the most to the reestablishment of English as official language after the Norman Conquest. | Understand; Recalling |

Question 1 expects the student to be able to compare the two phrases given, both in O.E. and in the Mod. E. translation; the focus has already been established in the instruction: “considering adjectives and determinant”. The factual knowledge learnt, or a search for the behaviour of adjectives and determiners in O.E. with their declensions (students were allowed to use their notes and the documents provided in class), should enable them to implement the information and realize that the examples illustrate the forms of the definite and indefinite declensions of adjectives, differentiating their use depending on whether or not they appear with a determiner. Further evaluation could have led them to state that this is a typical example but that exceptions might be contemplated.

Question 2 requires students to apply the most relevant graphic and phonetic changes: identifying them in the words by means of comparison, describing and explaining the graphic processes (Norman spelling conventions) and implementing the phonetic rules learnt (e.g. weakening of inflections, lengthening, Great Vowel Shift).

Question 3 corresponds to the base process of the taxonomy, recalling information, except that students are asked to select the information they want to provide: “two factors”.

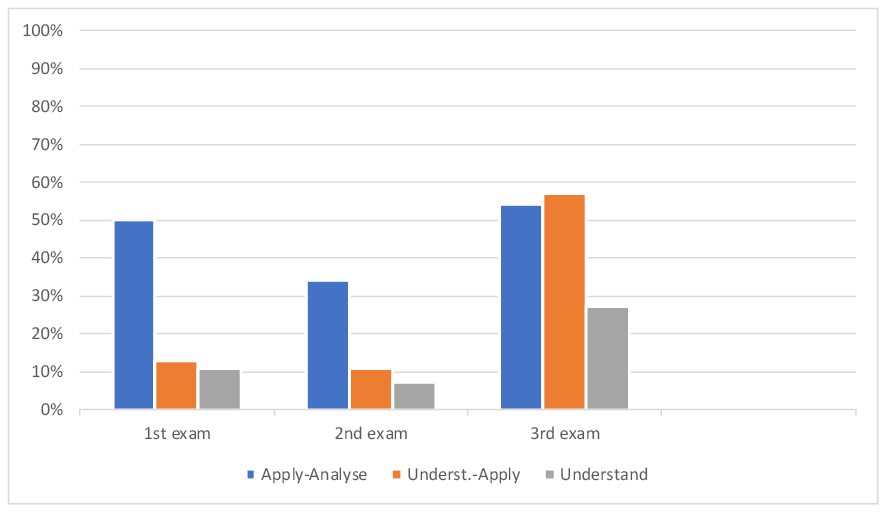

Figure 1 presents the results, in percentages, for each of the three exams used in our analysis. Columns indicate failure (mark obtained = zero) in answering the questions in which the cognitive processes apply-analyse, understand-apply and understand are most relevant.

Figure 1: Rates of completely inadequate answers or no answer

As can be seen, students have more difficulty answering questions that involve higher cognitive processes. Solving tasks in which they were required to apply previously acquired knowledge to analyse a given case was, in the first exam, beyond the reach of half of the students who took it; this percentage falls in the second exam, taken by a different group of students, but it is still quite high. Finally, the third exam was taken by students who had not taken or had failed the previous exams. Rather than the expected improvement, after the opportunity for specific feedback on the exam, attending office hours, etc., the rates increase (dramatically, for a concerned teacher) in all three types of question. Admittedly, students who sit the July exam are those who had previous problems, but they also have more experience of the subject matter and of the exam questions. Because History of the English Language is taught in the first semester, they have had the opportunity to attend office hours to raise doubts or ask for clarification, particularly since, owing to COVID-19 restrictions, these were arranged online and adapted to their individual timetables and personal needs; yet at most five percent may have used this individualized service.

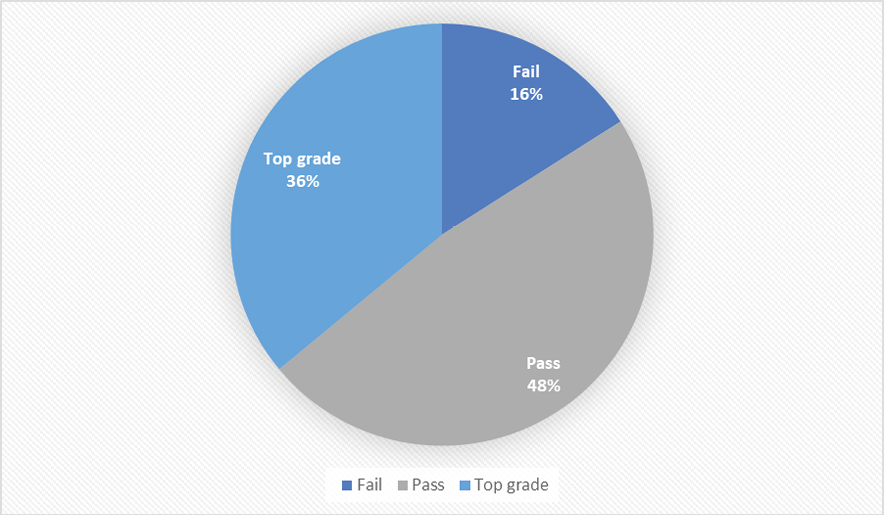

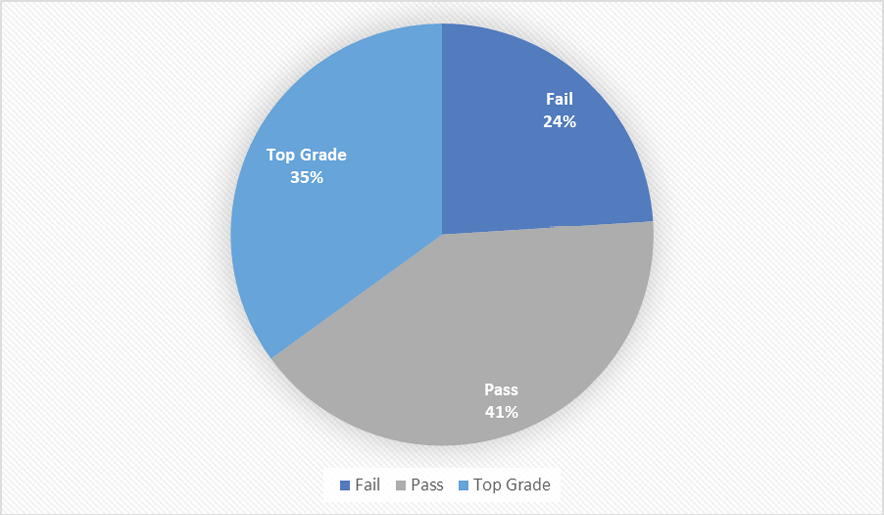

To obtain more comparable results, we also considered the case of the whole class doing the same tasks (same exercise, same question). Figures 2 and 3 present the results of the glossary compiled as a continuous assessment exercise, taking into account only those students who completed both tasks: the glossary entry and the question about that assigned entry.

Figure 2: Grades for glossary compilation

Figure 3: Grades for glossary question.

Figure 2 shows that most students passed this activity but only thirty-six percent reached the highest mark. One of the requirements was to avoid Wikipedia in favour of specialized manuals and other reliable sources; most of the students who obtained a pass simply replaced the “banned” encyclopaedia with the Britannica, offering very basic definitions. Those who failed gave wrong definitions, did not make the required amendments or did not provide references.

When students were asked about the importance, within the History of English, of the term they had supposedly researched and included in the glossary, the rate of top marks was just one percentage point below that of the previous exercise, but the proportion of students who failed rose by eight percentage points, so that nearly a quarter of the class failed. Again, even in a non-complex task like this, the difficulties in analysing and evaluating become clear.

IV. RESULTS AND DISCUSSION

The results indicate that the difficulties students have correspond to the higher levels of the taxonomies, where they are required to process information. Whether this is a consequence of a poor command of the L2 is a first hypothesis in a degree taught through EMI, but it does not seem to be supported by the tasks students are actually able to complete. Identifying the focus of the question, its restrictions and the instruction, when this is not a simple “explain” or “describe”, seems to be a factor worth considering. From the answers some students provide, it can be observed that their reading stops at the topic (O.E., the Norman Conquest, etc.) and ignores the focus, the restrictions and the instruction itself. Only when reviewing the exam do they realize this misinterpretation: “I did not understand the question because I did not pay attention to…”. These reading problems may carry over into their study and assessment habits. In questions where they should understand and apply, what most students actually do is “replicate”: they skip the comparison of the terms given and look for a similar example done in class that might be superimposed. This, which could be understood as a positive comparative procedure, breaks down when they cannot discriminate the elements that differ, and they reproduce the same written frame for every exercise without a proper understanding of the changes concerned. Thus, remaining at the lower levels of the cognitive processes prevents them from taking advantage of the notes, charts or other documents they may be allowed to use. These, if used at all, are merely skimmed and scanned for a ready-made answer to cut and paste.

Findings similar to those of our case study were reported by Shultz and Zemke (2019), from the chemistry departments of the Universities of Michigan and Minnesota, where EMI is not a source of difficulty. In order to evaluate the development of Information Literacy Skills, they recorded the procedures used by a group of upper-level chemistry majors trying to solve an inorganic chemistry problem. The students’ tendency to rely on a “broad keyword search in Google” revealed that:

This use of the superficial attributes of the [chemical] compound, “brown”, “inorganic”, was in keeping with the propensity of students to use Google as a method for expedient fact finding. This approach constitutes a direct search for solutions, rather than understanding the nature of the problem or planning how to solve it. (Shultz & Zemke, 2019, p. 622)

Additionally, the same problems were detected in the search engines students preferred when compiling our glossary; our students would even rely on blogs or other non-scientific databases. The data provided by Shultz and Zemke (2019) predate the pandemic, so it cannot have affected their results. In our case, it is a further factor to consider, since a large group of students received their lessons and took their exams online.

The percentage of students who do not sit the first exam has historically been quite high in this subject, at times reaching fifty percent; when the exam was held online, the rate of NPs (no presentados, i.e. “not presented”: those who do not dare to sit the first exam) fell to nineteen percent. Clearly, students felt more confident at home, with the “support” of their notes, the internet and their social-network groups. What happened here, and at other educational levels and in other countries, is summed up in the recent headline by Fregonara and Riva (2021) in Il Corriere: “Un anno in Dad: più difficile imparare, più facile copiare. I prof: lezioni andate a vuoto” (“A year of distance learning: harder to learn, easier to copy. Teachers: lessons wasted”). The same article states that two out of three students believe their marks would not have changed if the exams had been held in class, but they also consider that they have learnt less. It can be said that access to technology and connectivity affected primary and secondary education to a greater extent than universities during the first lockdown. Nevertheless, it is undeniable that the lack of face-to-face instruction prevents lecturers from reaching students who usually go unnoticed. While silence in an online meeting sounds deeper, it also allows some leaders in the class to feel less restrained about speaking their minds immediately, either through the microphone or in the chat (the lecturer might control the meeting chat but not the students’ WhatsApp group), without leaving time for the rest, slower or more self-conscious learners, to process information and ask questions. Although to a lesser extent, this affects the group, which is induced into a state of laziness, waiting for the answer to be provided by the teacher or by the “classmate” who is more daring but not necessarily better prepared.

The pass rate in the first in-class, face-to-face exam before the pandemic (January, academic year 2019-20), taken without notes or any other extra help, was twenty-nine percent; the rate for the online exam of January 2020-21 was forty-one percent; the failure rate rose from twenty-two percent in 2019-20 to thirty-nine percent in 2020-21. Overconfidence and a lack of proper preparation may have influenced this first exam, but rates did not improve for those who had a second opportunity in July, when only twelve percent passed.

When asked for their opinion over the years, students do not usually complain about the interest of the subject, the lectures or the material provided; the recurrent problem they mention is that, in order to pass this subject, they are required “to think” (sic). Fortunately, this requirement is also present in other subjects and levels, where teachers express, or used to express, their astonishment, since in recent decades this type of comment from secondary and university students has become more frequent. This mutual dissatisfaction becomes more apparent when students are assessed by means of something other than a simple multiple-choice quiz.

The development of cognitive skills requires more than minimalist questioning, and something is clearly failing when an exercise such as compiling a glossary requires a second part in which students are asked to explain the importance of the assigned term within the subject. Had students reached a satisfactory level of maturity, they would have included that importance in the definition itself, but most did not. Inquiry-Based Learning and Problem-Based Learning (Savery, 2015) can be used, and have also been used in the Humanities, to develop critical and evaluative capacities. Perhaps these strategies are somehow being left behind and should be taken up again.

The problem we are confronting is not that of having acquired the subject matter of Chemistry, Linguistics, History, Nursing or any other specialization; the problem lies in the person’s capacity to think and to reflect on any real problem they may face in their professional life, and in their lives.

Learning and assessment by competencies initially tackled this issue. The key competences as set out in the European Reference Framework (European Parliament 2006, L 394/14) are all interrelated, indicating that: “critical thinking, creativity, initiative, problem solving, risk assessment, decision taking, and constructive management of feelings play a role in all eight key competences.” In Bloom’s taxonomy, although the order of the categories is emphasized, they are also interrelated. Compartmentalizing the different aspects of learning might end in a “non-learning to learn”. Moreover, isolating the different axes of the process can lead to undesirable results. If, to assess a student’s Digital competence, we concentrate only on whether he/she was able to upload something and do not take into account the quality of how and what was uploaded, we might be fostering stagnant learners who remain at the bottom of the taxonomy. If those are the kind of European citizens we want, adapted to the economic needs of a globalized world, then (unfortunately for many) we are on the right road. The claims of Hirtt (2010, p. 108), who regarded OECD educational policies, which continue to this day, as a failure concerned only with maintaining a cheap labour market, would then come true:

This evolution of the labour market clearly illustrates the dominant discourse on the “knowledge society”. And this has radical consequences for educational policies. The OECD (2001) is forced to acknowledge cynically that “not everyone will pursue a career in the dynamic sector of the ‘new economy’ (in fact, most will not), so school curricula cannot be designed as if everyone were to go far” (Hirtt, 2010, p. 108; our translation)

If, on the other hand, the objective is to integrate and promote learning that is not exclusive, granted only to a minority, something has to change.

The frustration felt by students is also felt by teachers, who are likewise evaluated in terms of their success rates. So, whether or not the instructor knows much about ICTs, a multiple-choice quiz is a handy tool that seems more objective, leaves little room for complaints and delivers fast, good results. In terms of feedback, if any, with this type of exercise the learner usually receives, once more, a prefabricated fact. It is not a matter of demonizing either new technologies or competences, but of making better use of them. Our results show that many students are left behind; those students need proper feedback to improve their results. “Learning to learn” is not absolutely autonomous learning in the sense of uploading tasks whose only purpose is to be stored in the teacher’s cloud: “This competence means gaining, processing and assimilating new knowledge and skills as well as seeking and making use of guidance.” (European Parliament 2006, L 394/16). Many of those who reach Tertiary education have not achieved this key competence and are not even capable of seeking and making use of guidance; all they are accustomed to is copying the “right” answer word for word. On the other hand, many of those working in public education have a number of students per class that does not allow for proper tutoring of those who need it and are willing to advance.

V. CONCLUSIONS

The main objective of this case study was to delimit the difficulties a group of university students had in their learning process. We believe that this case can be extrapolated to other subjects and degrees and thus extended to compare the results obtained. Suggestions from previous literature and shared knowledge would be confirmed if the findings are similar.

The assumption that the market does not need people capable of thinking is the worst possible omen: taxonomies, competences, standards and all the pedagogical terminology would then exist only to classify cheap, “qualified” labour.

The survival of critical thinking in Tertiary education requires a holistic approach, including the previous levels of schooling, with a strong commitment from all the educational stakeholders concerned, starting with those who actually teach. It requires improving reading, with real, not feigned, discussion, so as to produce rather than merely regurgitate, and implementing the original taxonomies without leaving behind any category or axis. Using new technologies to the advantage of teachers and students means improving real learning that allows for the comparison, discrimination and evaluation of sources. In the field of the Humanities in particular, Inquiry-Based Learning and Problem-Based Learning have to be taken up again; they are not exclusive to the “other” sciences. Additionally, promoting and allowing for feedback does not imply that the learner is not autonomous; it is a form of validating his/her findings, the basis of science.

VI. REFERENCES

Anderson, L. W., & Krathwohl, D. R. (2001). A taxonomy for learning, teaching, and assessing: A revision of Bloom’s taxonomy of educational objectives. Longman.

Arbaugh, J. B., Bangert, A., & Cleveland-Innes, M. (2010). Subject matter effects and the Community of Inquiry (CoI) framework: An exploratory study. Internet and Higher Education, 13, 37–44.

Basu, A. (2020). How to be an expert in practically anything using heuristics, Bloom’s taxonomy, Dreyfus model, and building rubrics for mastery: Case of epidemiology and mountain bike riding. Qeios. https://doi.org/10.32388/BTH202

Biggs, J. B., & Collis, K. F. (1982). Evaluating the quality of learning: The SOLO taxonomy. Academic Press.

Bloom, B. S. (1956). Taxonomy of educational objectives. Longmans.

Breeze, R., & Dafouz, E. (2017). Constructing complex Cognitive Discourse Functions in higher education: An exploratory study of exam answers in Spanish- and English-medium instruction settings. System, 70, 81–91.

Christie, N. V. (2012). An interpersonal skills learning taxonomy for program evaluation instructors. Journal of Public Affairs Education, 18(4), 739–756. https://doi.org/10.1080/15236803.2012.12001711

CoI. (n.d.). CoI framework. https://coi.athabascau.ca/coi-model/

Doiz, A., Costa, F., Lasagabaster, D., & Mariotti, C. (2020). Linguistic demands and language assistance in EMI courses: What is the stance of Italian and Spanish undergraduates? Lingue Linguaggi, 33, 69–85.

Dreyfus, S. E., & Dreyfus, H. L. (1980). A five-stage model of the mental activities involved in directed skill acquisition. Operations Research Center, University of California.

European Parliament and the Council of the European Union. (2006). Recommendation 2006/962/EC of the European Parliament and of the Council of 18 December 2006 on key competences for lifelong learning. Official Journal of the European Union, L 394, 10–18. https://eur-lex.europa.eu/LexUriServ/LexUriServ.do?uri=OJ:L:2006:394:0010:0018

Fregonara, G., & Riva, O. (2021, July 9). Un anno in Dad: più difficile imparare, più facile copiare. I prof: lezioni andate a vuoto [A year of distance teaching: harder to learn, easier to copy. Teachers: lessons wasted]. Il Corriere.

Hirtt, N. (2010). La educación en la era de las competencias [Education in the age of competences]. REIFOP, 13(2), 108–114.

Krathwohl, D. R. (2002). A revision of Bloom’s taxonomy: An overview. Theory Into Practice, 41(4), 212–218. https://doi.org/10.1207/s15430421tip4104_2

Miller, G. E. (1990). The assessment of clinical skills/competence/performance. Academic Medicine, 65(9 Suppl), S63–S67. https://doi.org/10.1097/00001888-199009000-00045

Neumann, R. (2001). Disciplinary differences and university teaching. Studies in Higher Education, 26, 135–146.

Savery, J. R. (2015). Overview of Problem-Based Learning: Definitions and distinctions. In A. Walker, H. Leary, C. Hmelo-Silver, & P. Ertmer (Eds.), Essential readings in Problem-Based Learning: Exploring and extending the legacy of Howard S. Barrows (pp. 5–15). Purdue University Press.

Shultz, G. V., & Zemke, J. M. (2019). “I Wanna Just Google It and Find the Answer”: Student information searching in a Problem-Based inorganic chemistry laboratory experiment. Journal of Chemical Education, 96, 618–628.

Tabrizi, S., & Rideout, G. (2017). Active learning: Using Bloom’s taxonomy to support critical pedagogy. International Journal for Cross-Disciplinary Subjects in Education (IJCDSE), 8(3), 3202–3209.

Received: 9 October 2021

Accepted: 3 November 202

Notes

i The authors distinguish two axes: a vertical one of Pure and Applied disciplines (depending on their degree of applicability) and a horizontal one of Hard and Soft disciplines, connected with the “level of paradigm development” (2010, p. 44). For further clarification, see Neumann (2001).

ii Students have three exam sittings per academic year in which to pass a subject: one at the end of the semester, with a choice between two dates; a second before the summer holidays; and a third in September. In total, students have six opportunities, plus an extraordinary one, to pass a subject.

iii During the second year of the degree, students take “Academic Writing” as a compulsory subject, which includes learning how to avoid plagiarism and compile a proper reference list. The resources provided were therefore intended as a reminder.

iv In Italian, “Dad” stands for “didattica a distanza” (distance teaching).

v During the first lockdown, the Universidad de La Laguna provided students in need with laptops and internet connectivity: “La ULL presta 375 ordenadores, 390 conexiones a internet y 48 webcams a su alumnado en su proyecto ‘Brecha Digital’” [ULL lends 375 computers, 390 internet connections and 48 webcams to its students through its “Digital Divide” project]. https://www.ull.es/portal/noticias/2020/ull-prestamos-brecha-digital/